The Philosopher, the Bot, the Student, His Essay

June 01, 2023


Allen Stairs

In 1950, Alan Turing introduced what came to be called the Turing Test. Suppose you have a "conversation" with a computer. If its output is indistinguishable from that of an intelligent human, the computer, says Turing, is artificially intelligent. On November 30, 2022, OpenAI released ChatGPT, a web-available program that lets you enter queries and get back plain-English responses. When I asked ChatGPT how such programs work, it said:

You can provide the model with a piece of text (a "prompt"), and it will generate a continuation of this text. The model uses the patterns it learned during training to generate this output.

Overall, ChatGPT doesn't quite pass the Turing Test, but it comes eerily close. I once caught myself worrying that the bot would get annoyed by all my questions. Some conversations have reportedly taken a dark turn, but the tone in all my interactions has stayed chipper. I once asked how to fold a fitted sheet. After some (heh) chatty instructions, it added: "It may take a little practice, but with time, you'll be able to fold fitted sheets like a pro!" I've never wanted to be a professional sheet folder, but still….

Darren Hick had a more interesting experience. Hick received his PhD from the Maryland Philosophy Department in 2008. He teaches at Furman University and works on questions at the intersection of art, intellectual property, and artistic appropriation. His 2017 book Artistic License: The Philosophical Problems of Copyright and Appropriation has been lavishly praised: "A short review cannot do full justice to the richness of Hick's work," says James O. Young in the Journal of Aesthetics and Art Criticism. Hick is also the author of the widely used Introducing Aesthetics and the Philosophy of Art.

In mid-December of 2022, Hick was grading papers. One was on the paradox of horror: why do we take pleasure in horror fiction? In the 18th century, David Hume discussed a related puzzle about tragedy; the student's essay was on Hume and the Paradox of Horror. It was well written and described the paradox correctly, but then, says Hick, "the rest was BS." Hick suspected bot authorship. He asked ChatGPT, "What does David Hume say about the paradox of tragedy?" and got back a short essay. The Regenerate button produced several variations, all similar to the suspect essay. Hick tried a ChatGPT detector. The verdict: almost certainly produced by a computer. After an uncomfortable interview, the student confessed.

Hick recounted the episode in his Facebook feed. The post went viral, and Hick was interviewed by major news outlets, including CBS, Business Insider, and ABC in Australia. ChatGPT was blissfully unaware. When I asked the program in March who Darren Hudson Hick is, it pleaded ignorance. I added that Darren Hick is a philosopher and got a reply that confused Hick with another philosopher who works on completely different topics. The basic version of ChatGPT I was using can't go on the internet (it was trained on a database that only goes through the end of 2021). That might explain why it didn't know who Darren Hick is, but not why it made things up.

One possible explanation has some philosophical interest. Hick described most of the ChatGPT-produced essay as BS, and there's a theory to go with that. In his 1986 essay "On Bullshit," Harry Frankfurt analyzes how BS differs from mere dishonesty. (The essay first appeared in The Raritan Quarterly Review and was republished as a book by Princeton University Press in 2005.) On Frankfurt's account, to BS is to speak without caring whether what one says is true or false, which in Frankfurt's opinion is worse than lying. ChatGPT doesn't care, and not just because computers don't care about anything. Truth is irrelevant to the program. It just goes from sentence to sentence in the manner of the examples it was trained on.

As an experiment, I asked ChatGPT who the philosopher Allen Stairs is. Almost everything it said was wrong. Of course, ChatGPT doesn't mean to ignore the truth, and its output can often be useful and nuanced. ChatGPT doesn't just know how to fold a fitted sheet or produce (yes, really) a passable account of quantum decoherence. When I asked it whether the description of Henry Crawford as "black" in Jane Austen's Mansfield Park has a racial meaning, it produced a well-reasoned, appropriately hedged argument that the answer is probably no.

This is partly alethic luck. The database has enough of the right stuff that ChatGPT's output is often truthy or even true. More recently, OpenAI opened the program up to roaming the internet. With the right auxiliary apps, ChatGPT now actually tells you true, informative things about Darren Hick. How the program will fare on controversial questions remains to be seen. Of course, many human web searches induce strange beliefs in the seeker, but it would be unwise to predict that bot programs will never do better. After all, when we try, most of us can fact-check. However we manage this, it's not by magic. Machine learning programs (ChatGPT is an example) already produce remarkable results. When asked for examples, ChatGPT points to image recognition, medical diagnosis, financial fraud detection, and sentiment analysis in texts.

This is remarkable but also worrisome. Daniel Dennett points out that off-the-shelf AI tools already make it possible to create what he calls counterfeit people. Those creations, Dennett writes, "are the most dangerous artifacts in human history, capable of destroying not just economies but human freedom itself." This does not strike me as hyperbole. It's not clear that our hard-wired epistemic equipment is suited for the world we're making.

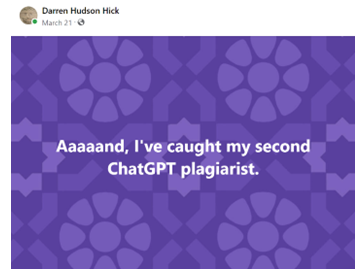

Compared to Dennett's concern, catching plagiarism by bot is low on the worry list, partly because, at least for now, we can still do that. There's this from Hick's Facebook feed in March: